Dr Eilidh Noyes

Lecturer in Cognitive Psychology

Dr Eilidh Noyes has researched extensively the accuracy of both algorithm and human face recognition. Here she comments on the decision by San Francisco legislators to ban the use of facial recognition technology.

“San Francisco has voted to ban the use of facial recognition technology by city agencies. Automatic face recognition systems will remain in place at federally operated border security sites.

Automatic face recognition systems are ubiquitous in security settings. If used correctly, these systems have the potential to reduce fraud and increase the number of criminal identifications. However, they have been met with a backlash, driven by concerns over privacy and over their accuracy. Strict data handling procedures and legislation must be established to ensure that privacy is protected.

The San Francisco face recognition ban has highlighted the importance of transparency about the role these systems play. Whilst an algorithm can unlock a personal electronic device with a high degree of autonomy, in security settings these algorithms only assist the identification procedure: a human operator makes the final identification.

The BBC report raises the concern that ‘these systems are error prone, particularly when dealing with women or people with darker skin’. These concerns are valid, and they originate from the data used to train the algorithms. Algorithms are optimised to the images they are trained on, and white male faces are over-represented in the training sets. These sets consist of millions of face images, often with thousands of images of each identity, and are typically images of celebrities and politicians gathered from the Internet. The over-representation of white male faces reflects a much wider gender and race imbalance in politics and celebrity culture.

This other-race effect is not unique to algorithms. Humans also show other-race effects, and, unlike algorithms, it is unlikely that humans can be retrained on a new set of images to address the issue: human face recognition is optimised to the faces we encountered during childhood. The other-race effect has (rightly) received a great deal of attention in algorithm research. However, it must not be overlooked as a problem for human face recognition, particularly as the vast majority of forensic facial examiners are white males.

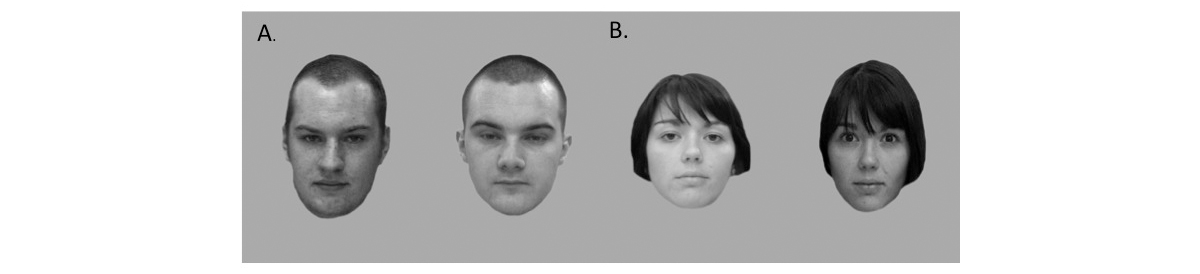

Before ruling out algorithms, we must ask: what is the alternative? Where algorithms are not used, the identity decision lies entirely with humans. Humans are extremely good at recognising familiar faces, such as those of their friends, family or celebrities. However, unfamiliar face matching, as in a border control scenario, is highly error prone. When people are asked to decide whether two images show the same person, they make errors on around 20% of trials (e.g. Burton et al. 2010). In a test designed to resemble passport control face matching, Australian passport officers were no better at the task than undergraduate students, and years of training and experience made no difference to the officers’ performance (White et al. 2014).

How does the performance of algorithms compare with that of humans? A recent study (Phillips et al. 2018) revealed that state-of-the-art face recognition algorithms outperformed the average human at an identity-matching task. Algorithm performance was comparable to that of the top-performing humans: forensic facial examiners and super-recognisers (see Noyes, Phillips & O’Toole, 2017 for a review of super-recognisers). The highest identification accuracy was achieved by combining the identity judgments made by humans with those made by the algorithm.

If algorithms can outperform the average human, this raises questions about our attitudes towards the accuracy of both algorithm and human face recognition. It is important to assess and improve current human face recognition procedures, as well as algorithm performance.”

References

- Burton, A. M., White, D., & McNeill, A. (2010). The Glasgow face matching test. Behavior Research Methods, 42(1), 286-291.

- Noyes, E., Phillips, P. J., & O’Toole, A. J. (2017). What is a super-recogniser? In Face processing: Systems, disorders and cultural differences (pp. 173-201).

- Phillips, P. J., Yates, A. N., Hu, Y., Hahn, C. A., Noyes, E., Jackson, K., ... & O’Toole, A. J. (2018). Face recognition accuracy of forensic examiners, superrecognizers, and face recognition algorithms. Proceedings of the National Academy of Sciences, 115(24), 6171-6176.

- White, D., Kemp, R., Jenkins, R., Matheson, M., & Burton, A. M. (2014). Passport officers’ errors in face matching. PLoS ONE, 9(8), e103510.

Dr Eilidh Noyes is a Lecturer in Cognitive Psychology at the University of Huddersfield.
